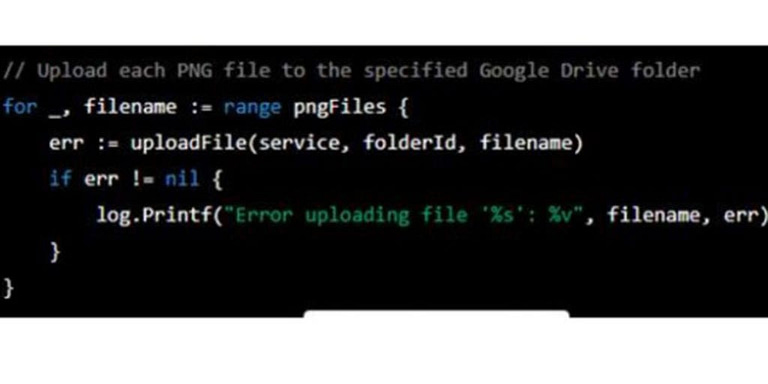

In the world of cybersecurity, prompt injection attacks are becoming increasingly difficult to defend against. Prompt injection is a type of attack that uses artificial intelligence (AI) to inject malicious code into an application or system. This type of attack can be used to gain access to sensitive data and disrupt operations.

Prompt injection attacks are particularly dangerous because they use AI algorithms to bypass traditional security measures such as firewalls and antivirus software. The attackers use machine learning techniques to identify weaknesses in the target system and then exploit them by injecting malicious code into the application or system. This makes it difficult for organizations to detect these types of attacks until it’s too late.

Simon Willison, a computer scientist at Google Research, recently presented his research on how AI-based prompt injection works at the USENIX Security Symposium 2021 conference in San Diego, California. In his presentation, he discussed how attackers can leverage machine learning models trained on large datasets in order to generate malicious payloads that can evade detection from existing security systems. He also demonstrated how this technique could be used in real-world scenarios such as phishing campaigns and ransomware attacks.

Willison’s research highlights just how hard it is for organizations today to protect themselves from these types of sophisticated cyberattacks using traditional methods alone. To effectively defend against AI prompt injections requires a multi-layered approach that includes both proactive measures such as monitoring user behavior for suspicious activity and reactive measures like patching vulnerable applications quickly when new threats emerge. Additionally, organizations should invest in advanced technologies such as artificial intelligence (AI) solutions which can help detect anomalies more quickly than humans alone ever could before they become major problems down the line..

Organizations must remain vigilant if they want to stay ahead of potential threats posed by AI prompt injections; otherwise their networks may become compromised with little warning or recourse available afterwards due diligence has been done after an incident occurs . It’s clear that defending against these kinds of sophisticated cyberattacks will require significant investments in both technology and personnel training so businesses can stay one step ahead of hackers who are constantly evolving their tactics .

|Why it’s Hard To Defend Against AI Prompt Injection Attacks|Cybersecurity|The Register